This is a segment from The Drop newsletter. To read full editions, subscribe.

Decentralized cloud computing provider io.net, which has its own IO token, is working with the Walrus team to let startups train, run and store their own custom AI models.

IO offers a network of GPUs for AI training and fine-tuning, while Walrus is enabling AI model storage in the deal. The integration will be available as a pay-per-use offering, meaning builders are only charged for the amount of storage and computing power they use.

This Bring Your Own Model (BYOM) offering lets AI agent devs and AI app builders develop and operate AI models without setting up their own data centers or hardware.

IO says it has over 10,000 GPUs and CPUs globally.

Walrus and IO’s BYOM offering will have to compete with other AI developer cloud services, though, including those from Bittensor, Lambda, Spheron, Akash, Gensyn, Vast AI, and Google’s Vertex products.

“Traditional centralized cloud models are not only expensive — they come with significant privacy risks and limited composability options that are challenging for developers who prioritize decentralization,” said Rebecca Simmonds, Managing Executive at Walrus Foundation.

“By leveraging our decentralized data storage solution, io.net will be able to provide the necessary compute power for advanced AI and ML development without any of the drawbacks of traditional models, making this a clear win for developers, users, and the entire Web3 industry,” the exec continued.

The internet can feel very centralized when giant cloud services experience outages, as happened to Google last week in an incident that also affected Cloudflare and a range of other apps and sites. That’s one obvious reason why centralized AI compute might not be ideal.

Walrus’s mainnet launched in March, with its main pitch being programmable, decentralized storage. The Walrus Foundation announced a raise of $140 million that month.

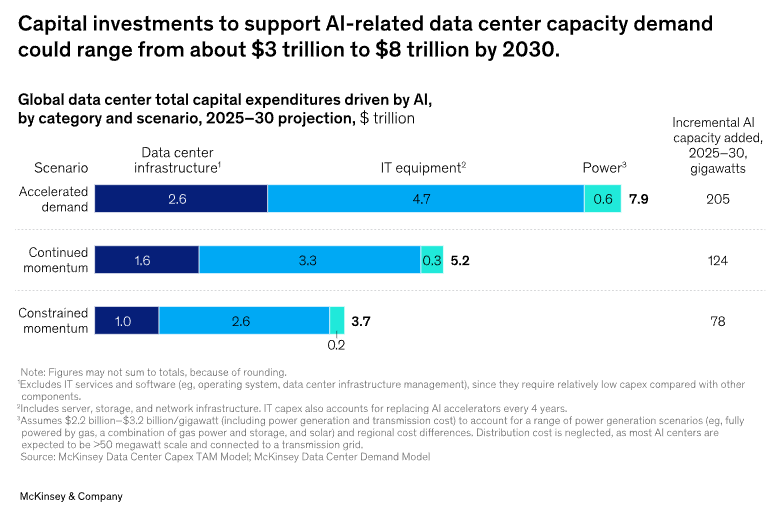

More broadly, the need for computing power for AI is expected to keep increasing annually. McKinsey researchers predict that data centers are going to need $6.7 trillion globally to keep up with demand by 2030 (though there’s a range of possible scenarios they modeled below).